
Raytracer texture mapping leaving artifacts

I am trying to get OBJ loading working in my raytracer. Loading OBJs works fine, but I am having trouble getting texture mapping to work.

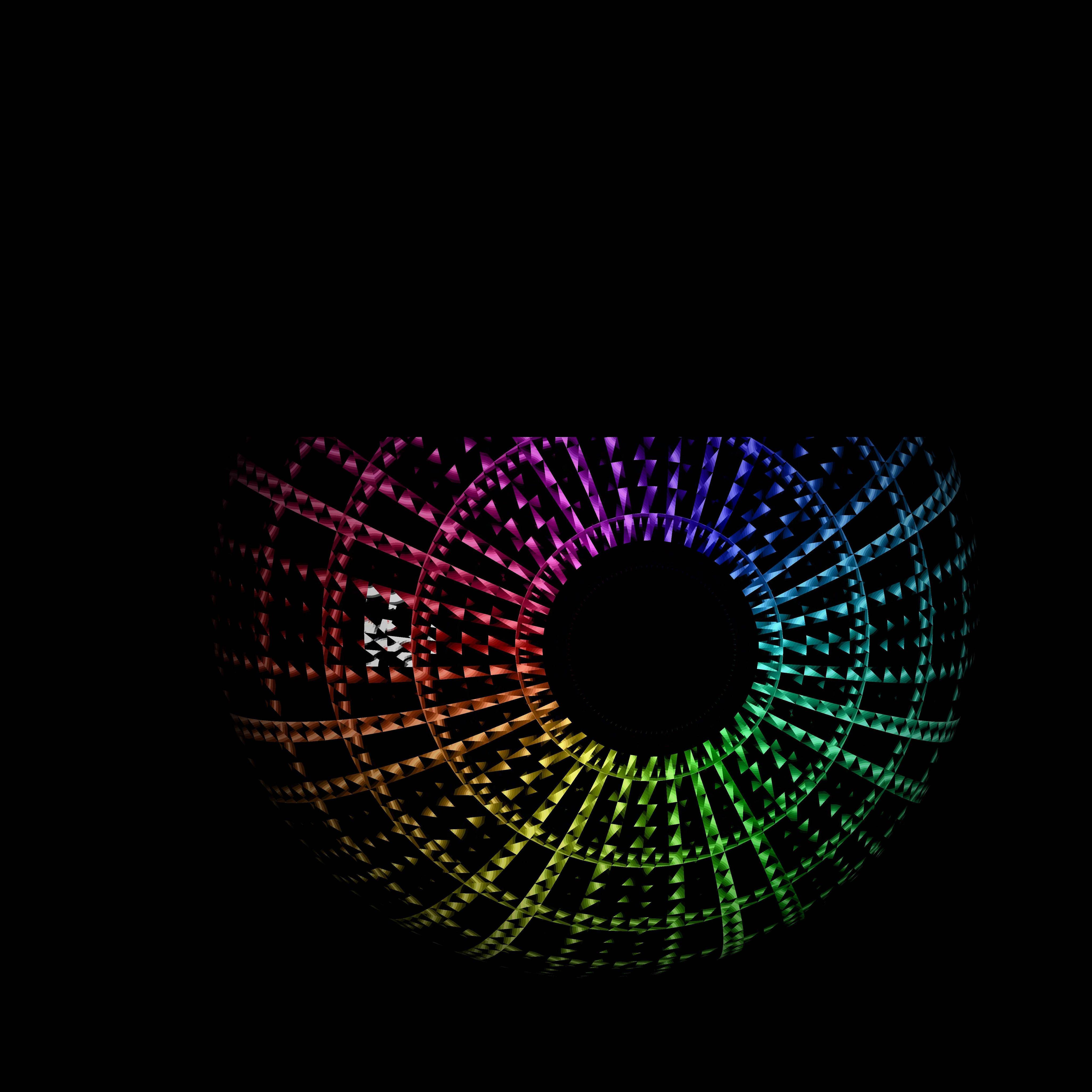

The image above shows my result. It is supposed to be a black sphere with colored "latitude and longitude" lines and a black spot in the middle, but every second triangle is left black.

My prof said it looks like the normals are backwards, but I don't think that is the case: the shape is still being hit, and the color of the "wrong" triangles is the background color of the texture (i.e. black in this case).

When I load the OBJ, each vertex has a UV coordinate associated with it. When a ray hits the shape, I compute its UV coordinate as follows:

- T: the triangle that was hit
- hp: where on the triangle the ray hit
- v1, v2, v3: the vertices of the triangle, each with a UV coordinate UV1, UV2, UV3

1. Find the distance from hp to each vertex v[i] (d1, d2, d3 respectively).
2. Find the weight of each distance (w1 = d1/(d1+d2+d3), and likewise for d2, d3).
3. Find the weighted UV coordinate: UV1/w1 + UV2/w2 + UV3/w3.
4. Look up the pixel color at this weighted coordinate.
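To see what the procedure above actually produces, here is a literal C++ transcription of it. This is a sketch: `Vec3`, `Vec2`, `dist` and `distanceWeightedUV` are hypothetical names, since the original code is not shown.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical minimal vector types; the question's actual types are not shown.
struct Vec3 { double x, y, z; };
struct Vec2 { double u, v; };

static double dist(Vec3 a, Vec3 b) {
    double dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// Literal transcription of the steps listed in the question, kept only to
// observe its output. It is NOT a correct way to interpolate UVs.
static Vec2 distanceWeightedUV(Vec3 hp, Vec3 v1, Vec3 v2, Vec3 v3,
                               Vec2 uv1, Vec2 uv2, Vec2 uv3) {
    double d1 = dist(hp, v1), d2 = dist(hp, v2), d3 = dist(hp, v3);
    double sum = d1 + d2 + d3;
    assert(sum > 0.0);
    double w1 = d1 / sum, w2 = d2 / sum, w3 = d3 / sum;  // weights sum to 1
    // Dividing by the weights: the result is not normalized, and it
    // blows up as any weight approaches 0.
    return {uv1.u / w1 + uv2.u / w2 + uv3.u / w3,
            uv1.v / w1 + uv2.v / w2 + uv3.v / w3};
}
```

On the triangle (0,0,0), (1,0,0), (0,1,0) with UVs (0,0), (1,0), (0,1), the point (0.5, 0.5, 0) is equidistant from all three vertices, so every weight is 1/3 and the result is 3·(UV1 + UV2 + UV3) ≈ (3, 3), whereas that point's position on the triangle corresponds to UV (0.5, 0.5). Out-of-range values like this make the texture lookup land on an unrelated texel (or off the texture), depending on how it wraps or clamps.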

Does anyone have any ideas what might be going on? I can post parts of my code if you think that would help.


Answers (1)


You do have a bug in your UV coordinate computation (regardless of whether there is an additional bug with your normals).

The reason: if hp is very close to v1, for example, the weight w1 will be very close to zero, so UV1/w1 tends to +infinity instead of approaching the expected value UV1.
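Written out with the question's own definitions, the limit is:

$$
w_1 = \frac{d_1}{d_1 + d_2 + d_3} \to 0 \quad \text{as } hp \to v_1,
\qquad\text{so}\qquad
\frac{UV_1}{w_1} \to +\infty \quad \text{(for each positive component of } UV_1\text{)}
$$

At hp = v1 exactly, d1 = 0 and the division is by zero, whereas a correct interpolation should return exactly UV1 there.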

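You should use barycentric coordinates instead: write hp as λ1·v1 + λ2·v2 + λ3·v3 with λ1 + λ2 + λ3 = 1, then interpolate the UVs with the same weights, UV = λ1·UV1 + λ2·UV2 + λ3·UV3.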
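A minimal C++ sketch of barycentric UV interpolation, assuming hp already lies in the triangle's plane (true for a ray/triangle hit point). `Vec3`, `Vec2`, `barycentric` and `interpolateUV` are hypothetical names, not the asker's code. If the intersection test is Möller–Trumbore, its u and v outputs are already the weights of v2 and v3 (with 1 − u − v for v1), so the recomputation below can be skipped.

```cpp
#include <cassert>

// Hypothetical minimal vector types; a real raytracer will have its own.
struct Vec3 { double x, y, z; };
struct Vec2 { double u, v; };

static Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Barycentric weights (l1, l2, l3) of point p in triangle (a, b, c).
// Assumes p lies in the triangle's plane. The weights always sum to 1,
// are all in [0, 1] when p is inside the triangle, and l1 == 1 at p == a.
static void barycentric(Vec3 p, Vec3 a, Vec3 b, Vec3 c,
                        double& l1, double& l2, double& l3) {
    Vec3 e0 = sub(b, a), e1 = sub(c, a), ep = sub(p, a);
    double d00 = dot(e0, e0), d01 = dot(e0, e1), d11 = dot(e1, e1);
    double d20 = dot(ep, e0), d21 = dot(ep, e1);
    double denom = d00 * d11 - d01 * d01;  // zero only for a degenerate triangle
    assert(denom != 0.0 && "degenerate triangle");
    l2 = (d11 * d20 - d01 * d21) / denom;  // weight of b
    l3 = (d00 * d21 - d01 * d20) / denom;  // weight of c
    l1 = 1.0 - l2 - l3;                    // weight of a
}

// UV at hit point hp: a weighted SUM of the vertex UVs (no division by weights).
static Vec2 interpolateUV(Vec3 hp, Vec3 v1, Vec3 v2, Vec3 v3,
                          Vec2 uv1, Vec2 uv2, Vec2 uv3) {
    double l1, l2, l3;
    barycentric(hp, v1, v2, v3, l1, l2, l3);
    return {l1 * uv1.u + l2 * uv2.u + l3 * uv3.u,
            l1 * uv1.v + l2 * uv2.v + l3 * uv3.v};
}
```

For the triangle (0,0,0), (1,0,0), (0,1,0) with UVs (0,0), (1,0), (0,1), this returns exactly UV1 at v1 and (0.5, 0.5) at the point (0.5, 0.5, 0); for any point inside the triangle the result stays within the range spanned by the vertex UVs.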
