Reputation: 43

python - find text between two $ and get them into a list

I have a text file that looks something like this:

    $ abc
    defghjik
    am here
    not now
    $ you
    are not
    here but go there
    $ ....

I want to extract the text between each pair of $ signs and put it into a list or a dict. How can I do this in Python by reading the file?

I tried a regex, but it gives me alternate values from the text file:

    import re

    f1 = open('some.txt','r')
    lines = f1.read()
    x = re.findall(r'$(.*?)$', lines, re.DOTALL)

I want output something like this:

    ['abc', 'defghjik', 'am here', 'not now']
    ['you', 'are not', 'here but go there']

Sorry, I am new to Python and trying to learn. Any help is appreciated. Thanks!

Upvotes: 1

Views: 84

Answers (5)

Reputation: 22483

Reading parts of files often boils down to an "iteration pattern." There are a number of tools in the itertools module that can help, or you can craft your own generator. For example:

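    def take_sections(predicate, iterable, firstpost=lambda x: x):
        """Yield lists of items; a new list starts wherever predicate is true."""
        i = iter(iterable)
        batch = None  # stays None if the input is empty
        try:
            nextone = next(i)
            while True:
                # The first item of a section is its marker; clean it up.
                batch = [firstpost(nextone)]
                nextone = next(i)
                while not predicate(nextone):
                    batch.append(nextone)
                    nextone = next(i)
                yield batch
        except StopIteration:
            # Input exhausted: emit the last section, if there was one.
            if batch is not None:
                yield batch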

This is similar to itertools.takewhile, except that it is more of a take-until loop (i.e. the test is at the bottom, not the top). It also has a built-in clean-up/post-processing function for the first line in a section (the "section marker"). Once you've abstracted this iteration pattern, you need to read the lines in the file, define how the section markers are identified and cleaned up, and run the generator:

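    with open('some.txt', 'r') as f1:
        # Strip the newline and surrounding whitespace from every line.
        lines = [l.strip() for l in f1]

    # A section starts at any line beginning with '$'.
    dollar_line = lambda x: x.startswith('$')
    # Drop the '$' and the whitespace after it from the marker line.
    clean_dollar_line = lambda x: x[1:].lstrip()

    print(list(take_sections(dollar_line, lines, clean_dollar_line)))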

Yielding:

    [['abc', 'defghjik', 'am here', 'not now'],
     ['you', 'are not', 'here but go there'],
     ['....']]
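For comparison, here is a rough sketch of the same sectioning idea built on itertools.groupby. This is not the code from this answer: the function name split_sections and the marker parameter are made up for illustration, and it assumes the lines have already been stripped.

```python
from itertools import groupby


def split_sections(lines, marker="$"):
    """Group lines into sections; a line starting with `marker` opens a new one."""
    section_id = 0

    def key(line):
        # Bump the counter on every marker line so that all lines of one
        # section share the same key and groupby keeps them together.
        nonlocal section_id
        if line.startswith(marker):
            section_id += 1
        return section_id

    sections = []
    for _, group in groupby(lines, key=key):
        group = list(group)
        if group[0].startswith(marker):
            # Remove the marker and the whitespace after it.
            group[0] = group[0][len(marker):].lstrip()
        sections.append(group)
    return sections


sample_lines = ["$ abc", "defghjik", "$ you", "are not", "$ ...."]
print(split_sections(sample_lines))
```

The key function is stateful, which is unusual for groupby; it works here only because the lines are visited once, in order.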

Upvotes: 0

Reputation: 915

$ has a special meaning in a regex: it is an anchor. It matches at the end of the string, or just before a newline at the end of the string. See "Regular Expression Operations" in the Python documentation.

You can escape the $ sign by prefixing it with a '\' character, so it won't be treated as an anchor.
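A small sketch of the difference, using a made-up sample string (the variable names are illustrative only):

```python
import re

sample = "cost: $5"

# Unescaped, $ is an anchor: it matches the empty string at the end.
unescaped = re.findall(r"$", sample)

# Escaped, \$ matches the literal dollar character.
escaped = re.findall(r"\$", sample)

# re.escape builds the escaped pattern for you.
pattern = re.escape("$")

print(unescaped)  # ['']
print(escaped)    # ['$']
print(pattern)    # \$
```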

Better yet, you don't need to use regex at all here. You can use the split method of strings in python.

    >>> string = '''$ abc
    defghjik
    am here
    not now
    $ you
    are not
    here but go there
    $ '''
    >>> string.split('$')
    ['', ' abc\ndefghjik\nam here\nnot now\n', ' you\nare not\nhere but go there\n', ' ']

And you get a list. Note that the last entry here is ' ', not an empty string. To remove the empty and whitespace-only entries, you can filter them out:

    a = [part for part in string.split('$') if part.strip()]
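Putting the split approach together, here is a minimal sketch that goes from the raw text to the list of lists asked for in the question. The helper name sections_from_text is hypothetical, and it assumes $ never appears inside the section text itself.

```python
def sections_from_text(text):
    """Split text on '$' and return one list of non-blank lines per section."""
    sections = []
    for chunk in text.split("$"):
        # Break each chunk into lines, dropping blank ones and stray whitespace.
        lines = [line.strip() for line in chunk.splitlines() if line.strip()]
        if lines:
            sections.append(lines)
    return sections


sample = "$ abc\ndefghjik\nam here\nnot now\n$ you\nare not\nhere but go there\n$ "
print(sections_from_text(sample))
# [['abc', 'defghjik', 'am here', 'not now'], ['you', 'are not', 'here but go there']]
```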

Upvotes: 0

Reputation: 1210

A regex may not actually be what you want: your desired output has every line as an individual entry in a list. I'd suggest just splitting the text into lines with lines.split('\n') and then iterating over the resulting list.

I'll write this as if you just need to print the text you want as output. Adapt as necessary.

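    with open('some.txt', 'r') as f1:
        lines = f1.read()

    lists = []
    for s in lines.split('\n'):
        if s.startswith('$'):
            # A '$' line starts a new section: print the finished one first.
            if lists:
                print(lists)
            # Keep any text that follows the '$' on the same line.
            first = s[1:].strip()
            lists = [first] if first else []
        elif s:
            # Skip blank lines; collect everything else.
            lists.append(s)
    if lists:
        print(lists)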

Happy Python-ing! Welcome to the club. :)

Upvotes: 0

Reputation: 251136

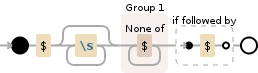

$ has a special meaning in regex, so to match it literally you need to escape it first. Note that inside a character class ([]), $ and other metacharacters lose their special meaning, so no escaping is required there. The following regex should do it:

\$\s*([^$]+)(?=\$)

Demo:

    >>> import re
    >>> lines = '''$ abc
    defghjik
    am here
    not now
    $ you
    are not
    here but go there
    $'''
    >>> it = re.finditer(r'\$\s*([^$]+)(?=\$)', lines, re.DOTALL)
    >>> [x.group(1).splitlines() for x in it]
    [['abc', 'defghjik', 'am here', 'not now'], ['you', 'are not', 'here but go there']]

Upvotes: 1

Reputation: 70742

In regular expressions, $ has a special meaning and needs to be escaped to match a literal $ character. Also, to match multiple sections, I would use a lookahead assertion (?=...) to check for the next literal $ without consuming it.

    >>> x = re.findall(r'(?s)\$\s*(.*?)(?=\$)', lines)
    >>> [i.splitlines() for i in x]
    [['abc', 'defghjik', 'am here', 'not now'], ['you', 'are not', 'here but go there']]
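To see why the lookahead matters, here is a small sketch on a made-up three-section string (the variable names are illustrative). If the pattern consumes the closing $, that $ cannot also open the next section, which is one way to end up with only alternate sections:

```python
import re

text = "$ a\n$ b\n$ c\n$"

# Consuming the closing $ means it cannot also open the next section,
# so every other section is skipped.
consuming = re.findall(r"\$\s*(.*?)\$", text, re.DOTALL)

# A lookahead checks for the closing $ without consuming it,
# so the same $ is still available to start the next match.
lookahead = re.findall(r"\$\s*(.*?)(?=\$)", text, re.DOTALL)

print(consuming)  # ['a\n', 'c\n']
print(lookahead)  # ['a\n', 'b\n', 'c\n']
```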

Upvotes: 1
