Reputation: 840

python multiprocessing - single file multiple commands

I have a requirement to process a file that contains about 100 shell (bash) commands, one command per line. I have to execute these commands in parallel (say 10 or 20 at a time, or let the machine decide how many to run at once). I honestly don't know how to accomplish this, so I borrowed some code from another post on this site:

from subprocess import PIPE
import subprocess
import time

def submit_job_max_len(job_list, max_processes):
    sleep_time = 0.1
    processes = list()
    for command in job_list:
        print('running process# {n}. Submitting {proc}.'.format(n=len(processes),
                                                               proc=str(command)))
        processes.append(subprocess.Popen(command, shell=False, stdout=None, stdin=PIPE))
        # Block until fewer than max_processes children are still running
        while len(processes) >= max_processes:
            time.sleep(sleep_time)
            processes = [proc for proc in processes if proc.poll() is None]
    # Wait for the remaining children to finish
    while len(processes) > 0:
        time.sleep(sleep_time)
        processes = [proc for proc in processes if proc.poll() is None]

cmd = 'cat runCommands.sh'
job_list = ((cmd.format(n=i)).split() for i in range(5))
submit_job_max_len(job_list, max_processes=10)

I don't understand what the last three lines are actually doing. My test runs show that the number passed to range(n) controls how many times every line is executed: if the number is 5, every line is executed 5 times. I don't want that. Can someone shed some light on this, please? And again, please excuse my ignorance here.
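Running the last three lines on their own shows what they build. Because `cmd` contains no `{n}` placeholder, `cmd.format(n=i)` returns the string unchanged, so the generator yields the same argument list `['cat', 'runCommands.sh']` once per value in `range(5)`. `cat` is therefore started five times on the same file, and `cat` only prints the file; it does not run the commands in it. A minimal sketch, assuming one complete bash command per line of `runCommands.sh`; both function names are hypothetical helpers for illustration:

```python
def build_job_list_like_original(n_jobs=5):
    """Rebuild the job list the way the last three lines of the question do."""
    cmd = 'cat runCommands.sh'
    # cmd has no "{n}" placeholder, so format(n=i) returns it unchanged and
    # every element is the same argument list. (A list instead of the
    # original generator, so the result can be printed and inspected.)
    return [cmd.format(n=i).split() for i in range(n_jobs)]


def read_commands(path):
    """Return one shell command string per non-empty line of the file at path."""
    with open(path) as f:
        return [line.strip() for line in f if line.strip()]


if __name__ == '__main__':
    # Prints a list of five identical ['cat', 'runCommands.sh'] entries.
    print(build_job_list_like_original())
```

With `job_list = read_commands('runCommands.sh')`, each job is a command string rather than an argument list, so the `Popen` call inside `submit_job_max_len` would need `shell=True` (and, for bash-only syntax, `executable='/bin/bash'`) in place of `shell=False`.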

Upvotes: 1

Views: 393

Answers (2)

Reputation: 33685

GNU Parallel is made for you:

cat the_file | parallel

By default it will run one job per cpu-core. This can be adjusted with --jobs.
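If staying in Python is preferred, the same behaviour (at most N lines running at once, the next one starting as soon as a slot frees up) can be approximated with the standard library. A minimal sketch, assuming one bash command per line and bash installed at `/bin/bash`; `run_file_in_parallel` and its `jobs` parameter are hypothetical names and not part of GNU Parallel:

```python
import os
import subprocess
from concurrent.futures import ThreadPoolExecutor


def run_command(command):
    """Run one command line through bash and return its exit status."""
    # shell=True because each line is a full bash command string;
    # executable swaps the default /bin/sh for bash.
    return subprocess.call(command, shell=True, executable='/bin/bash')


def run_file_in_parallel(path, jobs=None):
    """Run every non-empty line of path, at most `jobs` at a time."""
    # Default to one job per CPU core, similar to GNU Parallel's default.
    jobs = jobs or os.cpu_count() or 1
    with open(path) as f:
        commands = [line.strip() for line in f if line.strip()]
    # Threads are sufficient: each one only waits on a child process, and the
    # pool hands the next command to whichever worker finishes first.
    with ThreadPoolExecutor(max_workers=jobs) as pool:
        # map() returns exit statuses in the same order as the input lines
        return list(pool.map(run_command, commands))
```

For example, `run_file_in_parallel('runCommands.sh', jobs=10)` would return one exit status per command, in file order.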

GNU Parallel is a general parallelizer and makes it easy to run jobs in parallel on the same machine or on multiple machines you have ssh access to.

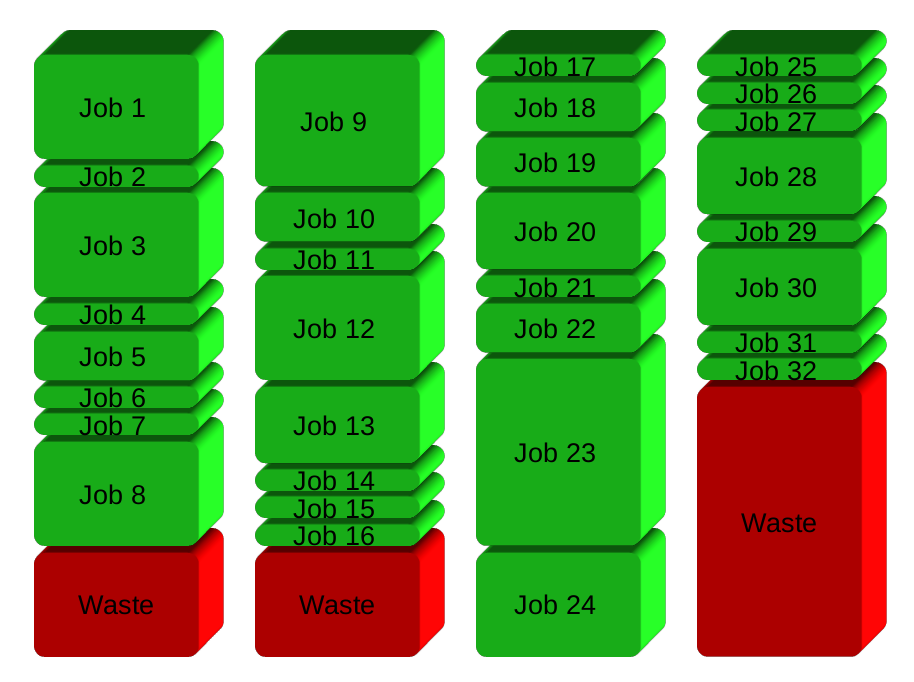

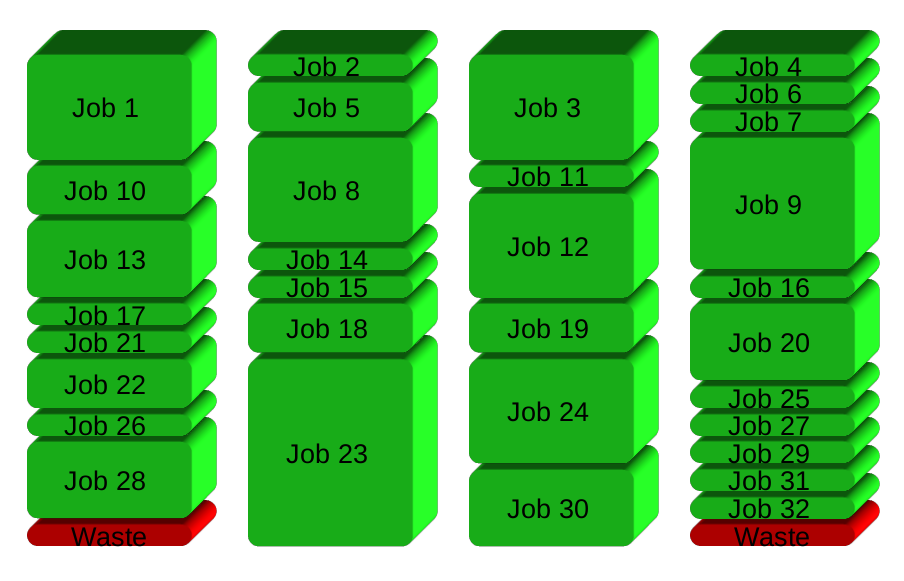

If you have 32 different jobs you want to run on 4 CPUs, a straight forward way to parallelize is to run 8 jobs on each CPU:

GNU Parallel instead spawns a new process when one finishes - keeping the CPUs active and thus saving time:

Installation

For security reasons you should install GNU Parallel with your package manager, but if GNU Parallel is not packaged for your distribution, you can do a personal installation, which does not require root access. It can be done in 10 seconds by doing this:

(wget -O - pi.dk/3 || curl pi.dk/3/ || fetch -o - http://pi.dk/3) | bash

For other installation options see http://git.savannah.gnu.org/cgit/parallel.git/tree/README

Learn more

See more examples: http://www.gnu.org/software/parallel/man.html

Watch the intro videos: https://www.youtube.com/playlist?list=PL284C9FF2488BC6D1

Walk through the tutorial: http://www.gnu.org/software/parallel/parallel_tutorial.html

Sign up for the email list to get support: https://lists.gnu.org/mailman/listinfo/parallel

Upvotes: 1

Reputation: 2413

What you need is a queue.

Use the multiprocessing module to start a set of worker processes that take commands from the queue. There are several examples that show how to do this.

One neat trick is to use a poison pill: after the real jobs, put one sentinel value per worker on the queue, so that each process exits once the queue has been drained. Search Python Module of the Week (PyMOTW) for examples of this.
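A minimal sketch of the queue-plus-poison-pill approach, assuming one bash command per line and bash at `/bin/bash`; the function names and the `None` sentinel are illustrative choices, not a fixed API. Each worker process pulls commands until it receives the sentinel, and one sentinel is queued per worker after all real commands:

```python
import multiprocessing
import subprocess

POISON_PILL = None  # sentinel telling a worker there is no more work


def worker(task_queue, result_queue):
    """Run commands from task_queue until the poison pill arrives."""
    while True:
        command = task_queue.get()
        if command is POISON_PILL:
            break
        status = subprocess.call(command, shell=True, executable='/bin/bash')
        result_queue.put((command, status))


def run_with_queue(commands, n_workers=None):
    """Run commands on n_workers processes; return (command, status) pairs."""
    commands = list(commands)
    n_workers = n_workers or multiprocessing.cpu_count()
    task_queue = multiprocessing.Queue()
    result_queue = multiprocessing.Queue()
    for command in commands:
        task_queue.put(command)
    # One pill per worker; the queue is FIFO, so pills come after real jobs.
    for _ in range(n_workers):
        task_queue.put(POISON_PILL)
    workers = [multiprocessing.Process(target=worker,
                                       args=(task_queue, result_queue))
               for _ in range(n_workers)]
    for p in workers:
        p.start()
    # Drain results before join(), so children are not blocked flushing
    # their queue buffers. Results arrive in completion order.
    results = [result_queue.get() for _ in commands]
    for p in workers:
        p.join()
    return results
```

Called as `run_with_queue(read_lines, n_workers=10)` from inside an `if __name__ == '__main__':` block, where `read_lines` is a hypothetical list of the file's stripped, non-empty lines; the guard matters on platforms that start workers with the spawn method.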

Best of luck.

Upvotes: 0
