
Can I provide multiple targets to a seq2seq model?

I'm doing video captioning on the MSR-VTT dataset.

This dataset has 10,000 videos and, for each video, 20 different captions.

My model is a seq2seq RNN. The encoder's inputs are the video features, the decoder's inputs are the embedded target captions, and the decoder's outputs are the predicted captions.
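To make the decoder side concrete, here is a minimal sketch of how one caption could be turned into a decoder input and a decoder target under teacher forcing. The `<bos>`/`<eos>` markers and the helper name `make_decoder_pair` are assumptions for illustration only, not my actual code.

```python
def make_decoder_pair(caption_tokens, bos="<bos>", eos="<eos>"):
    """Return (decoder_input, decoder_target) for one caption.

    The decoder input is the caption shifted right by one position, so at
    each step the decoder sees the previous ground-truth token and has to
    predict the next one.
    """
    decoder_input = [bos] + list(caption_tokens)
    decoder_target = list(caption_tokens) + [eos]
    return decoder_input, decoder_target


dec_in, dec_out = make_decoder_pair(["a", "man", "plays", "tennis"])
print(dec_in)
print(dec_out)
```

With several captions per video, each (video, caption) pair would simply yield one such input/target pair while the encoder input stays the same.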

I'm wondering whether using the same video several times with different captions is useful or not.

Since I couldn't find explicit information, I tried to benchmark it.

Benchmark:

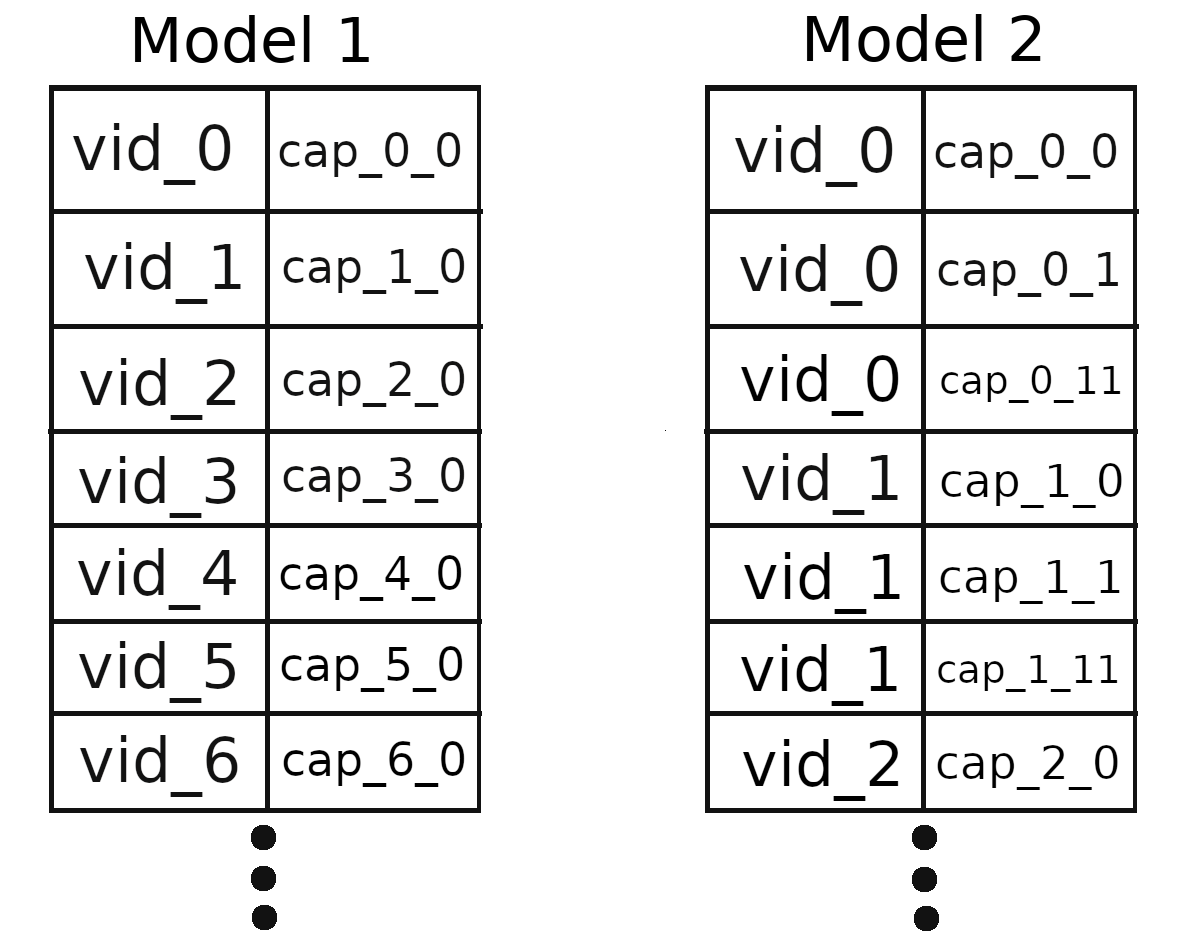

Model 1: One caption for each video

I trained it on 1108 sport videos, with a batch size of 5, over 60 epochs. This configuration takes about 211 seconds per epoch.

Epoch 1/60 ; Batch loss: 5.185806 ; Batch accuracy: 14.67% ; Test accuracy: 17.64%

Epoch 2/60 ; Batch loss: 4.453338 ; Batch accuracy: 18.51% ; Test accuracy: 20.15%

Epoch 3/60 ; Batch loss: 3.992785 ; Batch accuracy: 21.82% ; Test accuracy: 54.74%

...

Epoch 10/60 ; Batch loss: 2.388662 ; Batch accuracy: 59.83% ; Test accuracy: 58.30%

...

Epoch 20/60 ; Batch loss: 1.228056 ; Batch accuracy: 69.62% ; Test accuracy: 52.13%

...

Epoch 30/60 ; Batch loss: 0.739343 ; Batch accuracy: 84.27% ; Test accuracy: 51.37%

...

Epoch 40/60 ; Batch loss: 0.563297 ; Batch accuracy: 85.16% ; Test accuracy: 48.61%

...

Epoch 50/60 ; Batch loss: 0.452868 ; Batch accuracy: 87.68% ; Test accuracy: 56.11%

...

Epoch 60/60 ; Batch loss: 0.372100 ; Batch accuracy: 91.29% ; Test accuracy: 57.51%

Model 2: 12 captions for each video

Then I trained on the same 1108 sport videos, with a batch size of 64.

This configuration takes about 470 seconds per epoch.

Since I have 12 captions for each video, the total number of samples in my dataset is 1108*12.

That's why I chose this batch size (64 ~= 12*old_batch_size), so that the two models run the optimizer roughly the same number of times per epoch.
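As a quick check of that claim, here is the arithmetic as a small sketch. It assumes the last, smaller batch of each epoch is kept rather than dropped; the function name `steps_per_epoch` is only illustrative.

```python
import math

NUM_VIDEOS = 1108


def steps_per_epoch(num_samples, batch_size):
    # The last, possibly smaller, batch still triggers one optimizer step.
    return math.ceil(num_samples / batch_size)


steps_model_1 = steps_per_epoch(NUM_VIDEOS * 1, batch_size=5)
steps_model_2 = steps_per_epoch(NUM_VIDEOS * 12, batch_size=64)
print(steps_model_1, steps_model_2)  # 222 208
```

So the two setups are close (222 vs 208 optimizer steps per epoch) but not identical.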

Epoch 1/60 ; Batch loss: 5.356736 ; Batch accuracy: 09.00% ; Test accuracy: 20.15%

Epoch 2/60 ; Batch loss: 4.435441 ; Batch accuracy: 14.14% ; Test accuracy: 57.79%

Epoch 3/60 ; Batch loss: 4.070400 ; Batch accuracy: 70.55% ; Test accuracy: 62.52%

...

Epoch 10/60 ; Batch loss: 2.998837 ; Batch accuracy: 74.25% ; Test accuracy: 68.07%

...

Epoch 20/60 ; Batch loss: 2.253024 ; Batch accuracy: 78.94% ; Test accuracy: 65.48%

...

Epoch 30/60 ; Batch loss: 1.805156 ; Batch accuracy: 79.78% ; Test accuracy: 62.09%

...

Epoch 40/60 ; Batch loss: 1.449406 ; Batch accuracy: 82.08% ; Test accuracy: 61.10%

...

Epoch 50/60 ; Batch loss: 1.180308 ; Batch accuracy: 86.08% ; Test accuracy: 65.35%

...

Epoch 60/60 ; Batch loss: 0.989979 ; Batch accuracy: 88.45% ; Test accuracy: 63.45%

Here is the intuitive representation of my datasets:

How can I interpret these results?

When I manually looked at the test predictions, Model 2's predictions looked more accurate than Model 1's.

In addition, I used a batch size of 64 for Model 2, which means I could probably get even better results by choosing a smaller batch size. It seems I can't find a better training setup for Model 1, since its batch size is already very low.

On the other hand, Model 1 has better loss and training accuracy results...

What should I conclude?

Does Model 2 constantly overwrite the previously trained captions with the new ones instead of adding new possible captions?


Answers (1)


> I'm wondering whether using the same video several times with different captions is useful or not.

I think it definitely is. Multiple captions tell the model that the mapping from video to caption is not one-to-one, so the weights get trained more on the video context than on one particular phrasing.

Because the video-to-caption mapping is not one-to-one, even an arbitrarily large network should never reach 100% training accuracy (or zero loss): the same input is paired with several different targets, and the model cannot match all of them at once. That reduces overfitting significantly.
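Here is a rough sketch of why the loss cannot reach zero. It looks at a single decoding step where the same input (same video, same caption prefix) is paired with different next words. The best a model can do is predict the empirical distribution of those words, and the cross-entropy it is left with is that distribution's entropy. The function name and the toy words are made up for illustration. With teacher forcing, this only applies at steps where the captions share a prefix, the first word being the clearest case.

```python
import math
from collections import Counter


def min_cross_entropy(targets):
    """Lowest achievable average cross-entropy (in nats) when one fixed
    input is paired with the given list of target tokens."""
    counts = Counter(targets)
    total = len(targets)
    # Cross-entropy is minimized when the predicted distribution equals the
    # empirical target distribution; the minimum is that distribution's entropy.
    return sum((c / total) * math.log(total / c) for c in counts.values())


one_caption = min_cross_entropy(["man"])
several_captions = min_cross_entropy(["man", "player", "person", "man"])
print(one_caption)       # zero: a single target can be fit exactly
print(several_captions)  # strictly positive: targets disagree
```

With a single caption per video the floor is zero, so the network is free to memorize. With conflicting captions the floor is positive, which is consistent with Model 2 showing a higher training loss.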

> When I manually looked at the test predictions, Model 2's predictions looked more accurate than Model 1's.

Nice! The same is visible in your final-epoch numbers:

Model 1 ; Batch accuracy: 91.29% ; Test accuracy: 57.51%

Model 2 ; Batch accuracy: 88.45% ; Test accuracy: 63.45%

Model 2 generalizes better: lower training accuracy, higher test accuracy.
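To put a number on it, here is the train/test gap computed from the final-epoch values you posted. Keep in mind that "Batch accuracy" is measured on a single training batch, so this is only a rough proxy for the real training accuracy.

```python
final_epoch = {
    "Model 1": {"train_acc": 91.29, "test_acc": 57.51},
    "Model 2": {"train_acc": 88.45, "test_acc": 63.45},
}


def generalization_gap(metrics):
    # Difference between training and test accuracy, in percentage points.
    return metrics["train_acc"] - metrics["test_acc"]


gaps = {name: round(generalization_gap(m), 2) for name, m in final_epoch.items()}
for name, gap in gaps.items():
    print(f"{name}: gap = {gap:.2f} points")
```

That gives a gap of 33.78 points for Model 1 against 25.00 points for Model 2.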

> In addition, I used a batch size of 64 for Model 2, which means I could probably get even better results by choosing a smaller batch size. It seems I can't find a better training setup for Model 1, since its batch size is already very low.

I might not be the right person to comment on the value of batch_size here, but increasing it a bit more should be worth a try.

batch_size is a trade-off. With small batches, each update pulls the previously learned weights towards the current batch, so over time the updates can point in different directions (how much depends on the learning rate). With large batches, each update averages over many samples, so the model keeps learning similar knowledge again and again and the updates point in almost the same direction.

And remember that there are a lot of other ways to improve the results.

> On the other hand, Model 1 has better loss and training accuracy results... What should I conclude?

Training accuracy and loss tell you how the model performs on the training data, not on the validation/test data. In other words, a very small loss value might mean memorization.

> Does Model 2 constantly overwrite the previously trained captions with the new ones instead of adding new possible captions?

It depends on how the data is split into batches: are the multiple captions of the same video in the same batch, or spread over multiple batches?
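One way to make sure the captions of a video end up spread over different batches is to flatten the data into (video, caption) pairs and shuffle them before batching. This is a minimal sketch with made-up names (`make_batches`, `captions_per_video`); I don't know how your input pipeline is actually written.

```python
import random


def make_batches(captions_per_video, batch_size, seed=0):
    """captions_per_video: dict mapping a video id to its list of captions."""
    # One training sample per (video, caption) pair.
    pairs = [(vid, cap) for vid, caps in captions_per_video.items() for cap in caps]
    # Shuffle so captions of the same video are not grouped back to back.
    rng = random.Random(seed)
    rng.shuffle(pairs)
    return [pairs[i:i + batch_size] for i in range(0, len(pairs), batch_size)]


toy = {f"video{v}": [f"caption {v}-{c}" for c in range(3)] for v in range(4)}
batches = make_batches(toy, batch_size=4)
for batch in batches:
    print(batch)
```

Passing a different `seed` for each epoch would reshuffle the pairs, so a given video keeps being revisited with different captions throughout training instead of one caption always coming last.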

Remember, Model 2 has multiple captions per video, which might be a major factor behind its better generalization. It also explains why its training loss is higher.

Thanks!
