Reputation: 8528

Perl or Bash threadpool script?

I have a script - a linear list of commands - that takes a long time to run sequentially. I would like to create a utility script (Perl, Bash or other available on Cygwin) that can read commands from any linear script and farm them out to a configurable number of parallel workers.

So if myscript is

command1

command2

command3

I can run:

threadpool -n 2 myscript

Two threads would be created, one commencing with command1 and the other command2. Whichever thread finishes its first job first would then run command3.

Before diving into Perl (it's been a long time) I thought I should ask the experts if something like this already exists. I'm sure there should be something like this because it would be incredibly useful both for exploiting multi-CPU machines and for parallel network transfers (wget or scp). I guess I don't know the right search terms. Thanks!

Upvotes: 7

Views: 1359

Answers (5)

Reputation: 51

Source: http://coldattic.info/shvedsky/pro/blogs/a-foo-walks-into-a-bar/posts/7

# That's commands.txt file

echo Hello world

echo Goodbye world

echo Goodbye cruel world

cat commands.txt | xargs -I CMD --max-procs=3 bash -c CMD
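The one-liner above can be wrapped to match the `threadpool -n 2 myscript` interface from the question. This is only a sketch: the function name `threadpool` and its option handling are hypothetical, and it assumes GNU xargs (the one Cygwin ships), because `-d '\n'` is a GNU extension. That option makes xargs treat each line as one literal argument, so quotes and backslashes inside a command reach `bash -c` untouched.

```shell
#!/usr/bin/env bash
# threadpool: hypothetical wrapper, named after the command in the question.
# Usage: threadpool [-n WORKERS] SCRIPT
threadpool() {
    local workers=2                      # default pool size (an assumption)
    if [ "$1" = "-n" ]; then
        workers=$2
        shift 2
    fi
    local script=$1

    # Drop blank lines, then run each remaining line in its own `bash -c`.
    #   -d '\n'  one argument per input line, no quote processing (GNU xargs)
    #   -n 1     one command line per bash invocation
    #   -P N     at most N invocations running at the same time
    grep -v '^[[:space:]]*$' "$script" |
        xargs -d '\n' -n 1 -P "$workers" bash -c
}
```

With the function loaded, `threadpool -n 2 myscript` starts two lines of myscript at once and begins the next line as soon as either finishes. Output from concurrent commands can still interleave.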

Upvotes: 0

Reputation: 33740

If you need the output not to be mixed up (which xargs -P risks doing), then you can use GNU Parallel:

parallel -j2 ::: command1 command2 command3

Or if the commands are in a file:

cat file | parallel -j2

GNU Parallel is a general parallelizer and makes it easy to run jobs in parallel on the same machine or on multiple machines you have ssh access to.

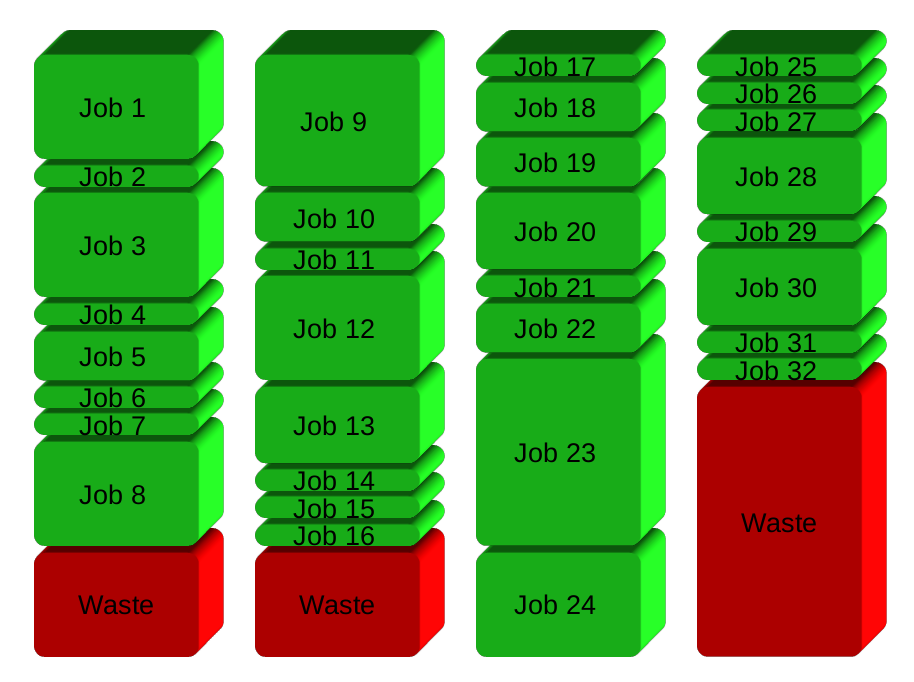

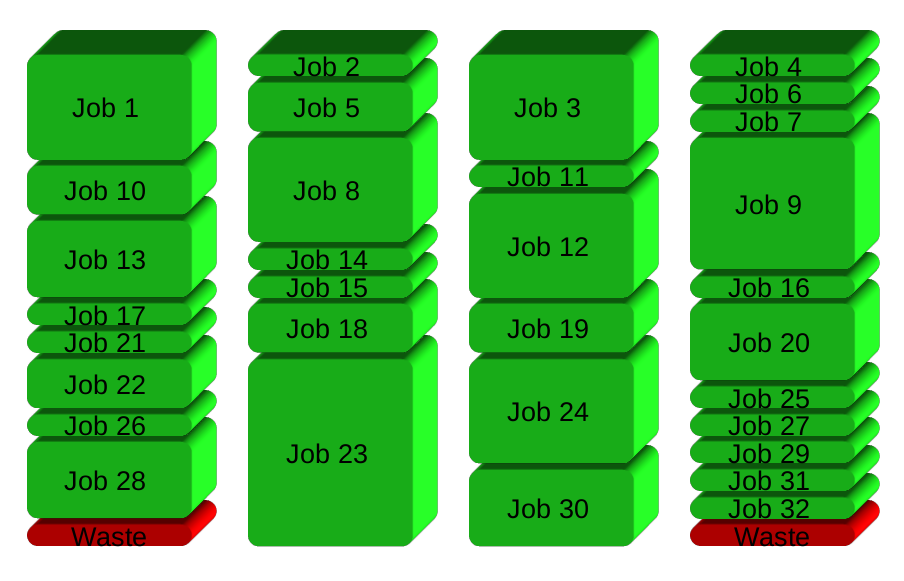

If you have 32 different jobs you want to run on 4 CPUs, a straight forward way to parallelize is to run 8 jobs on each CPU:

GNU Parallel instead spawns a new process when one finishes - keeping the CPUs active and thus saving time:
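The difference between the two strategies can be checked with a little arithmetic. The sketch below is an idealized model, not how GNU Parallel is implemented: the function names and job durations are made up for illustration. `static_makespan` splits the jobs into equal consecutive batches up front, while `dynamic_makespan` gives each job to whichever CPU becomes free first.

```shell
#!/usr/bin/env bash
# static_makespan CPUS D1 D2 ...: fixed consecutive batches, one per CPU.
# The run ends when the slowest batch ends.
static_makespan() {
    local cpus=$1; shift
    local per=$(( ($# + cpus - 1) / cpus ))   # jobs per CPU, rounded up
    local -a load=()
    local i=0 c d max=0
    for d in "$@"; do
        c=$(( i / per ))                      # batch this job belongs to
        load[c]=$(( ${load[c]:-0} + d ))
        i=$(( i + 1 ))
    done
    for d in "${load[@]}"; do
        if (( d > max )); then max=$d; fi
    done
    echo "$max"
}

# dynamic_makespan CPUS D1 D2 ...: each job starts on the CPU that is
# free earliest, i.e. the one with the least work assigned so far.
dynamic_makespan() {
    local cpus=$1; shift
    local -a load=()
    local c d best max=0
    for (( c = 0; c < cpus; c++ )); do load[c]=0; done
    for d in "$@"; do
        best=0
        for (( c = 1; c < cpus; c++ )); do
            if (( load[c] < load[best] )); then best=$c; fi
        done
        load[best]=$(( load[best] + d ))
    done
    for d in "${load[@]}"; do
        if (( d > max )); then max=$d; fi
    done
    echo "$max"
}
```

As a hypothetical workload, take 32 jobs where the first 8 need 4 time units each and the other 24 need 1 unit each. `static_makespan 4` on those durations prints 32, because one CPU gets all the slow jobs. `dynamic_makespan 4` prints 14, which equals the total work (56 units) divided evenly over 4 CPUs.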

Installation

If GNU Parallel is not packaged for your distribution, you can do a personal installation, which does not require root access. It can be done in 10 seconds by doing this:

(wget -O - pi.dk/3 || curl pi.dk/3/ || fetch -o - http://pi.dk/3) | bash

For other installation options see http://git.savannah.gnu.org/cgit/parallel.git/tree/README

Learn more

See more examples: http://www.gnu.org/software/parallel/man.html

Watch the intro videos: https://www.youtube.com/playlist?list=PL284C9FF2488BC6D1

Walk through the tutorial: http://www.gnu.org/software/parallel/parallel_tutorial.html

Sign up for the email list to get support: https://lists.gnu.org/mailman/listinfo/parallel

Upvotes: 4

Reputation: 26861

You could also use make. Here is a very interesting article on how to use it creatively

Upvotes: 0

Reputation: 393653

There is xjobs, which is better at separating individual job output than xargs -P.

http://www.maier-komor.de/xjobs.html

Upvotes: 1

Reputation: 48212

In Perl you can do this with Parallel::ForkManager:

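#!/usr/bin/perl

use strict;

use warnings;

use Parallel::ForkManager;

my $pm = Parallel::ForkManager->new( 8 ); # number of jobs to run in parallel

open my $fh, '<', 'commands.txt' or die "Cannot open commands.txt: $!";

while ( my $cmd = <$fh> ) {

  chomp $cmd;

  next unless $cmd =~ /\S/; # skip blank lines

  $pm->start and next; # parent: fork a child, then read the next command

  system( $cmd ); # child: run the command through the shell

  $pm->finish; # child: exit and free its slot

}

close $fh or die $!;

$pm->wait_all_children; # block until every remaining child has exited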
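For comparison, the same start / finish / wait_all_children pattern can be sketched in plain Bash with no extra modules. The helper name `run_pool` is hypothetical, and the sketch assumes Bash 4.3 or later, since it relies on `wait -n` to block until any one background job exits.

```shell
#!/usr/bin/env bash
# run_pool MAX FILE: hypothetical helper. Runs each line of FILE as a shell
# command, keeping at most MAX of them running at once.
# Assumes Bash 4.3+ for `wait -n`.
run_pool() {
    local max=$1 file=$2 running=0 cmd
    # `|| [ -n "$cmd" ]` also picks up a last line with no trailing newline.
    while IFS= read -r cmd || [ -n "$cmd" ]; do
        [[ $cmd =~ [^[:space:]] ]] || continue   # skip blank lines
        if (( running >= max )); then
            wait -n || true                      # wait for any one job to exit
            running=$(( running - 1 ))
        fi
        # stdin comes from /dev/null so a job cannot consume the command file
        bash -c "$cmd" </dev/null &
        running=$(( running + 1 ))
    done < "$file"
    wait                                         # wait for the remaining jobs
}
```

`run_pool 8 commands.txt` mirrors the Perl script above. Exit statuses of the individual commands are ignored here, as they are in the Perl version.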

Upvotes: 3
