Reputation: 67

How can I sequentially process jobs that are executed on multiple EC2 instances?

Currently, there are multiple jobs (shell scripts) running on multiple EC2 instances. The instances are not linked to each other. Each job is started at a set time by cron.

What service or configuration should I use to confirm that job A has finished on one EC2 instance and then run job B on another EC2 instance?

(If job A does not finish on its instance, job B should not be executed on the other instance.)

It would be helpful if you could also give me some pages to refer to.

Upvotes: 1

Views: 731

Answers (2)

Reputation: 12075

There are multiple options with different features.

As already mentioned in the other answer, one option is chaining the jobs through queues. This is simple and reliable, but quite rigid. It may be sufficient for your needs.

If you need more complexity, control and insight, you may use AWS Step Functions.
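As a rough illustration of the Step Functions option, the sketch below builds an Amazon States Language definition that runs two jobs strictly in order. It assumes each job is exposed as a Step Functions activity that a small worker on the corresponding EC2 instance polls. That wiring is only one possibility and is not stated in the question. The function name `build_chain_definition`, the state names, the ARNs and the timeout are all hypothetical placeholders.

```python
import json


def build_chain_definition(steps, timeout_seconds=3600):
    """Return a state machine definition that runs the steps in order.

    steps: list of (state_name, activity_arn) pairs, in execution order.
    No Catch or Retry is configured, so if a task fails or times out the
    whole execution fails and the later states never start.
    """
    if not steps:
        raise ValueError("at least one step is required")
    states = {}
    for i, (name, arn) in enumerate(steps):
        state = {
            "Type": "Task",
            "Resource": arn,
            "TimeoutSeconds": timeout_seconds,  # assumed upper bound per job
        }
        if i + 1 < len(steps):
            state["Next"] = steps[i + 1][0]  # hand over to the following job
        else:
            state["End"] = True  # last job in the chain
        states[name] = state
    return {"StartAt": steps[0][0], "States": states}


# Hypothetical activity ARNs, one per job/instance.
definition = build_chain_definition([
    ("JobA", "arn:aws:states:us-east-1:123456789012:activity:job-a"),
    ("JobB", "arn:aws:states:us-east-1:123456789012:activity:job-b"),
])
print(json.dumps(definition, indent=2))
```

The resulting JSON could be used as the definition when creating a state machine. The cron entry on the first instance would then be replaced by a scheduled start of the execution.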

Upvotes: 1

Reputation: 269470

You haven't provided much information at all, so it is difficult to recommend options.

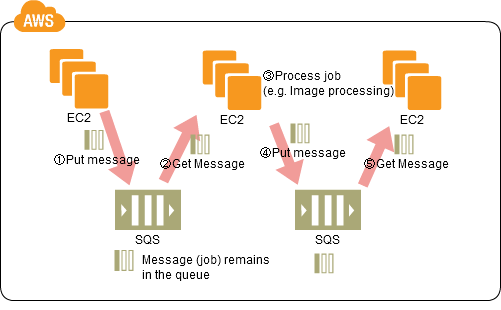

However, you might consider using multiple Amazon SQS queues.

Each EC2 instance should pull 'work' from a different queue, eg:

- Instance A pulls from Queue A

- Instance B pulls from Queue B

- etc

The 'worker' code on each instance would:

- Pull a message off its queue (which causes the message to become invisible for a defined period)

- Process the work

- Send a message to the next queue

- Delete the message from its queue (to indicate that it is finished)

If a worker fails to process a message within the 'invisibility period', Amazon SQS will make the message visible again for a worker to process, or (based upon your queue settings) move it to a Dead Letter Queue for forensic investigation.

This is known as the Queuing Chain Pattern (CDP:Queuing Chain Pattern - AWS-CloudDesignPattern).
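The worker steps listed above could look roughly like the following sketch. It assumes `sqs` is a boto3 SQS client (for example `boto3.client("sqs")`) and that `run_job` is your own function that runs the shell script and raises an exception on failure. The function name `process_one`, the queue URLs and the timeout values are hypothetical.

```python
def process_one(sqs, in_queue_url, out_queue_url, run_job,
                wait_seconds=20, visibility_timeout=900):
    """Handle at most one message. Return True if a job ran to completion."""
    # 1. Pull a message off this instance's queue. It becomes invisible
    #    to other consumers for `visibility_timeout` seconds.
    resp = sqs.receive_message(
        QueueUrl=in_queue_url,
        MaxNumberOfMessages=1,
        WaitTimeSeconds=wait_seconds,        # long polling
        VisibilityTimeout=visibility_timeout,
    )
    messages = resp.get("Messages", [])
    if not messages:
        return False
    msg = messages[0]

    # 2. Process the work. If this raises, nothing below runs, so the
    #    message reappears after the visibility timeout and the next
    #    queue is never notified.
    run_job(msg["Body"])

    # 3. Tell the next instance that it may start (skipped for the last job).
    if out_queue_url is not None:
        sqs.send_message(QueueUrl=out_queue_url, MessageBody=msg["Body"])

    # 4. Delete the message to mark the work as finished.
    sqs.delete_message(QueueUrl=in_queue_url,
                       ReceiptHandle=msg["ReceiptHandle"])
    return True


# Possible use on instance A (names are placeholders):
#   import boto3, subprocess
#   sqs = boto3.client("sqs")
#   run = lambda body: subprocess.run(["/opt/jobs/job_a.sh"], check=True)
#   while True:
#       process_one(sqs, QUEUE_A_URL, QUEUE_B_URL, run)
```

Sending to the next queue before deleting means a crash between steps 3 and 4 can cause the downstream job to be triggered twice, so the jobs should tolerate a repeated run.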

If each individual job is relatively small, then a better pattern would be to use AWS Lambda functions rather than Amazon EC2 instances. That way, no costs are incurred while there is no work in the queue.

Alternatively, it would be useful if each EC2 instance could act as any worker, rather than only one specific worker. This would avoid potential blockages if one process takes significantly more time than other processes. The worker would pull from the latest queue possible, like a reverse version of CDP:Priority Queue Pattern - AWS-CloudDesignPattern.
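One way to read "pull from the latest queue possible" is sketched below: a generic worker checks the queues from the last stage back to the first and takes the first message it finds. It assumes `sqs` is a boto3 SQS client and `queue_urls` is ordered from the first stage to the last. The function name `receive_from_latest` and the timeout are hypothetical.

```python
def receive_from_latest(sqs, queue_urls, visibility_timeout=900):
    """Return (stage_index, message) from the latest stage with work, else None."""
    # Walk the stages backwards so work that is closest to completion
    # is picked up before new work is started.
    for index in range(len(queue_urls) - 1, -1, -1):
        resp = sqs.receive_message(
            QueueUrl=queue_urls[index],
            MaxNumberOfMessages=1,
            WaitTimeSeconds=0,               # short poll, do not block here
            VisibilityTimeout=visibility_timeout,
        )
        messages = resp.get("Messages", [])
        if messages:
            return index, messages[0]
    return None
```

The returned index tells the worker which job to run and which queue to notify afterwards. Short polling can occasionally return nothing even when a queue holds messages, so a real worker would call this in a loop rather than treat a single empty result as final.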

Upvotes: 2
