
PyAudio - first few chunks of recording are zero

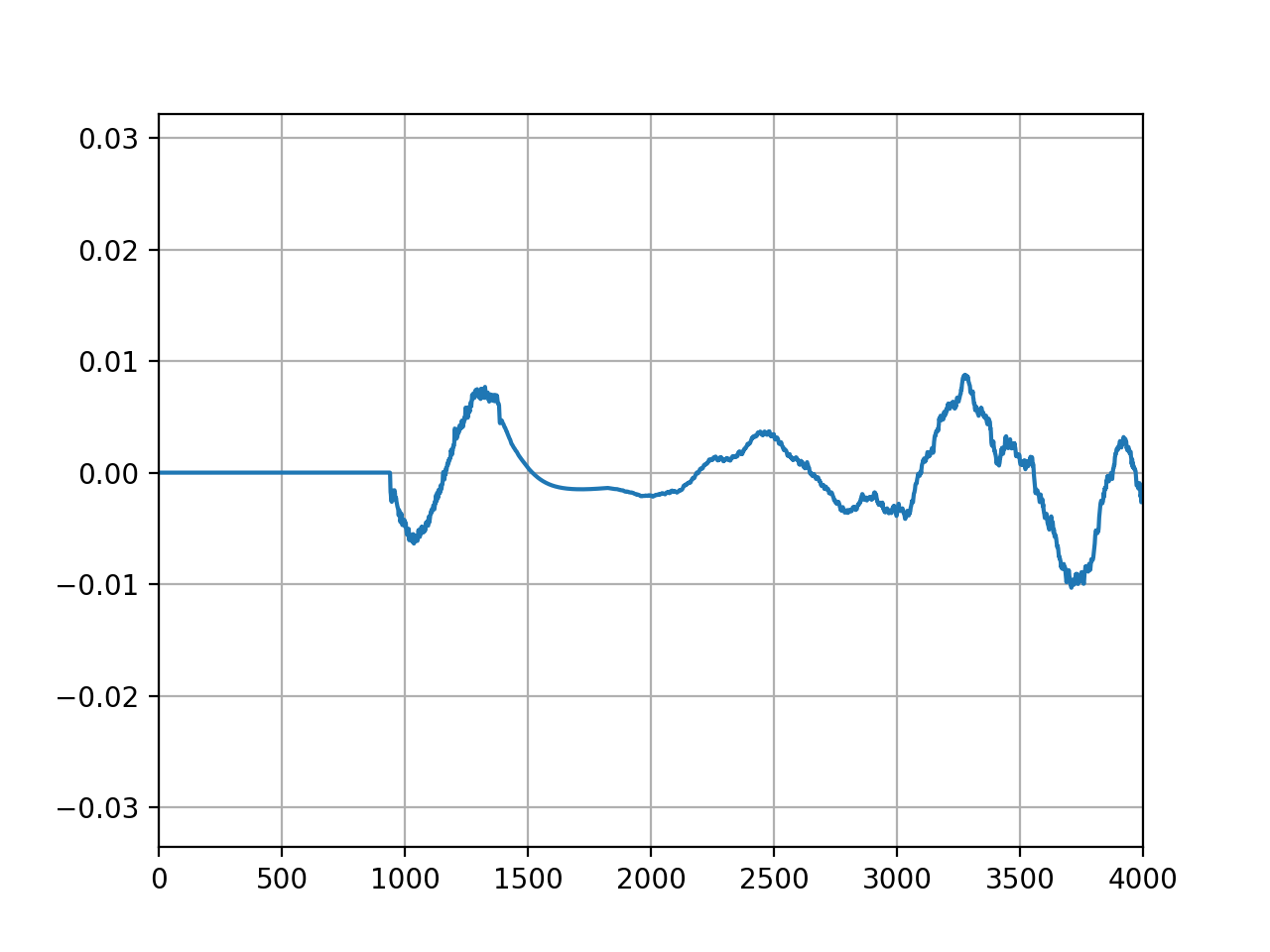

I've been having issues when trying to synchronously play back audio to and record audio from a device, in this case my laptop's speakers and microphone.

The problem

I've tried to implement this with both the Python modules `sounddevice` and `pyaudio`, but both implementations have the same odd issue: the first few frames of recorded audio are always zero. Has anyone else run into this? The issue seems to be independent of the chunk size used (i.e., it's always the same number of samples that are zero).

Is there anything I can do to prevent this from happening?
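To check that the number of zero samples really stays the same across chunk sizes, it helps to count the leading zeros in each recording rather than reading them off the plot. A minimal sketch, assuming the recording is a NumPy array such as `mic_buffer` in the code below; `count_leading_zeros` is a hypothetical helper, not part of PyAudio or sounddevice:

```python
import numpy as np


def count_leading_zeros(samples: np.ndarray) -> int:
    """Return how many samples at the start of `samples` are exactly zero."""
    flat = np.ravel(samples)  # works for (N,) and (N, 1) buffers alike
    nonzero = np.flatnonzero(flat)
    # If every sample is zero, the whole buffer counts as leading zeros
    return int(nonzero[0]) if nonzero.size else flat.size


# Example: 3 silent samples followed by signal
recording = np.array([0.0, 0.0, 0.0, 0.2, -0.1, 0.0, 0.3], dtype=np.float32)
print(count_leading_zeros(recording))  # 3
```

Running this on recordings made with different `FRAME_SIZE` values gives numbers that can be compared directly.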

Code

```python
import queue

import matplotlib.pyplot as plt
import numpy as np
import pyaudio
import soundfile as sf

FRAME_SIZE = 512

# Mono excitation signal; sf.read returns (data, samplerate)
excitation, fs = sf.read("excitation.wav", dtype=np.float32)

# Instantiate PyAudio
p = pyaudio.PyAudio()

q = queue.Queue()

output_idx = 0

# Recording buffer, rounded up to a whole number of frames
mic_buffer = np.zeros((excitation.shape[0] + FRAME_SIZE
                       - (excitation.shape[0] % FRAME_SIZE), 1))


def rec_play_callback(in_data, framelength, time_info, status):
    global output_idx

    # print status of playback in case of event
    if status:
        print(f"status: {status}")

    chunksize = min(excitation.shape[0] - output_idx, framelength)

    # write data to output buffer, zero-padded to a full frame
    out_data = np.zeros(framelength, dtype=np.float32)
    out_data[:chunksize] = excitation[output_idx:output_idx + chunksize]

    # write input data to input buffer
    inputsamples = np.frombuffer(in_data, dtype=np.float32)
    if not np.any(inputsamples):  # every sample in this frame is zero
        print("Empty frame detected")

    # send input data to buffer for main thread
    q.put(inputsamples)

    if chunksize < framelength:
        return (out_data.tobytes(), pyaudio.paComplete)

    output_idx += chunksize
    return (out_data.tobytes(), pyaudio.paContinue)


# Define playback and record stream
stream = p.open(rate=fs,
                channels=1,
                frames_per_buffer=FRAME_SIZE,
                format=pyaudio.paFloat32,
                input=True,
                output=True,
                input_device_index=1,   # Macbook Pro microphone
                output_device_index=2,  # Macbook Pro speakers
                stream_callback=rec_play_callback)

stream.start_stream()

input_idx = 0
while stream.is_active():
    data = q.get(timeout=1)
    mic_buffer[input_idx:input_idx + FRAME_SIZE, 0] = data
    input_idx += FRAME_SIZE

stream.stop_stream()
stream.close()
p.terminate()

# Plot captured microphone signal
plt.plot(mic_buffer)
plt.show()
```

Output

```
Empty frame detected
```

Edit: I'm running this on macOS using CoreAudio. This might be relevant, as pointed out by @2e0byo.


Answers (2)


For my future self.

Got a workaround. After `stream.start_stream()`, add

```python
while not any(stream.read(1)):
    pass
```

If you value the one non-zero chunk that terminates the loop, store it:

```python
while not any(v := stream.read(1)):
    pass
```

After the loop, `v` is a `bytes` object whose length depends on the stream format. With the single-channel 32-bit float stream in the question, `len(v)` is 4.
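Note that `stream.read()` wraps PortAudio's blocking read, which isn't available on a stream opened with `stream_callback`, so this loop applies to a blocking-mode stream rather than the callback stream in the question. Below is a minimal sketch of the same idea as a helper with an upper bound on the number of reads, so a genuinely silent (e.g. muted) input can't hang the program. `discard_leading_silence`, `max_reads` and `FakeStream` are hypothetical names; the helper only assumes an object with a `read(num_frames)` method that returns `bytes`, which is what lets it be exercised here with a fake stream instead of a sound card:

```python
from typing import Optional, Protocol


class ReadableStream(Protocol):
    def read(self, num_frames: int) -> bytes: ...


def discard_leading_silence(stream: ReadableStream,
                            frames_per_read: int = 1,
                            max_reads: int = 48_000) -> Optional[bytes]:
    """Read until a chunk containing any non-zero byte arrives.

    Returns that first non-zero chunk, or None if `max_reads` reads were
    all silent (a hypothetical safety limit, not a PyAudio feature).
    """
    for _ in range(max_reads):
        chunk = stream.read(frames_per_read)
        # bytes iterate as ints; a float32 0.0 sample is b"\x00" * 4
        if any(chunk):
            return chunk
    return None


class FakeStream:
    """Stand-in for a blocking pyaudio stream: 3 zero frames, then data."""

    def __init__(self) -> None:
        # 1.0 as little-endian float32 is b"\x00\x00\x80\x3f"
        self._frames = [b"\x00" * 4] * 3 + [b"\x00\x00\x80\x3f"]

    def read(self, num_frames: int) -> bytes:
        # Ignores num_frames: each call returns one mono float32 frame
        return self._frames.pop(0)


print(discard_leading_silence(FakeStream()))  # b'\x00\x00\x80?'
```

With a real blocking stream, the call would be `first = discard_leading_silence(stream)` right after `stream.start_stream()`.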


This is a general question, and we are missing a complete view of your architecture. So the best we can do is point to some general concepts.

In digital signal processing systems, there is very often a leading blank and a constant delay in the processed signal. This is most often related to the size of a buffer and the sampling rate. In some systems you may not even be aware that the buffer is there, for example when it is part of a device driver that is not accessible to the user-level API.
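Because such a delay is constant, it can also be measured and compensated after the fact rather than eliminated. One common technique (not one used in the question or in this answer) is to cross-correlate the recording with the excitation and take the lag of the correlation peak. A minimal sketch with NumPy, using a synthetic delayed signal in place of a real recording; `estimate_delay` is a hypothetical helper:

```python
import numpy as np


def estimate_delay(recorded: np.ndarray, reference: np.ndarray) -> int:
    """Return the lag (in samples) by which `recorded` trails `reference`."""
    corr = np.correlate(recorded, reference, mode="full")
    # Index 0 of the "full" output corresponds to lag -(len(reference) - 1)
    return int(np.argmax(corr)) - (len(reference) - 1)


rng = np.random.default_rng(0)
excitation = rng.standard_normal(2000)
true_delay = 137
# Simulated recording: leading zeros, then the excitation, same total length
recorded = np.concatenate([np.zeros(true_delay), excitation[:-true_delay]])

print(estimate_delay(recorded, excitation))  # 137
```

`np.correlate` computes the correlation directly, which gets slow for recordings several seconds long; `scipy.signal.correlate(..., method="fft")` computes the same quantity faster. Once the delay is known, `recorded[delay:]` lines up with the excitation.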

To reduce an offset due to buffering, you have to make the buffer smaller or sample faster. Your system then has to process smaller packets more often, and changes in either packet size or sampling clock can affect your signal processing, depending on your signal content and the kind of processing you are doing. So either change increases the overhead per unit of data processed through the system and may also affect performance in other ways.
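The trade-off can be put in numbers: a buffer of N frames at sample rate fs holds N / fs seconds of audio, and the callback has to run fs / N times per second. A small sketch using the question's `FRAME_SIZE = 512` and a few alternatives; the 44.1 kHz rate is an assumption, since the question reads `fs` from the WAV file, and `buffer_stats` is a hypothetical helper:

```python
from typing import Tuple


def buffer_stats(frames: int, fs: float) -> Tuple[float, float]:
    """Return (duration of one buffer in ms, callbacks per second)."""
    return 1000.0 * frames / fs, fs / frames


FS = 44_100  # assumed sample rate; the question's fs comes from excitation.wav
for frames in (128, 256, 512, 1024):
    latency_ms, rate = buffer_stats(frames, FS)
    print(f"{frames:5d} frames: {latency_ms:6.2f} ms per buffer, "
          f"{rate:6.1f} callbacks/s")
```

For 512 frames at 44.1 kHz this works out to about 11.6 ms per buffer and about 86 callbacks per second; halving the buffer halves the first number and doubles the second.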

An approach that I use for debugging problems of this sort is to try to find the buffer that is setting the offset, tracing through source code if needed, and then see whether you can adjust size or sampling rate and still achieve the performance that you need in terms of throughput and accuracy.
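A minimal sketch of that experiment, assuming you wrap the question's record/playback code in a function that takes `frames_per_buffer` and returns the recorded samples. `record_with_buffer` stands for that hypothetical function; here it is replaced by a fake (`fake_recording`, also hypothetical) that always produces the same fixed offset, to show what a size-independent offset looks like in the results:

```python
from typing import Callable, Dict, Iterable

import numpy as np


def leading_zeros_by_buffer_size(
        record_with_buffer: Callable[[int], np.ndarray],
        sizes: Iterable[int]) -> Dict[int, int]:
    """Map each frames_per_buffer value to the number of leading zero samples."""
    result = {}
    for size in sizes:
        samples = np.ravel(record_with_buffer(size))
        nonzero = np.flatnonzero(samples)
        result[size] = int(nonzero[0]) if nonzero.size else samples.size
    return result


def fake_recording(frames_per_buffer: int) -> np.ndarray:
    # Hypothetical stand-in: 300 zero samples regardless of buffer size
    return np.concatenate([np.zeros(300), np.ones(4 * frames_per_buffer)])


print(leading_zeros_by_buffer_size(fake_recording, [256, 512, 1024]))
# {256: 300, 512: 300, 1024: 300}
```

If the counts stay constant as `frames_per_buffer` changes, as the question reports, the offset is probably set by a buffer below the level PyAudio exposes; if they scale with the buffer size, the user-level buffer is the one to tune.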
