Reputation: 3072

How to tell if a bunch of shapes are all mirror images using OpenCV?

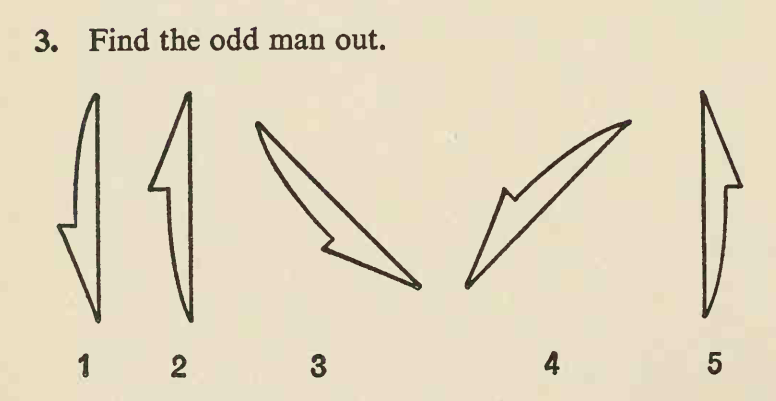

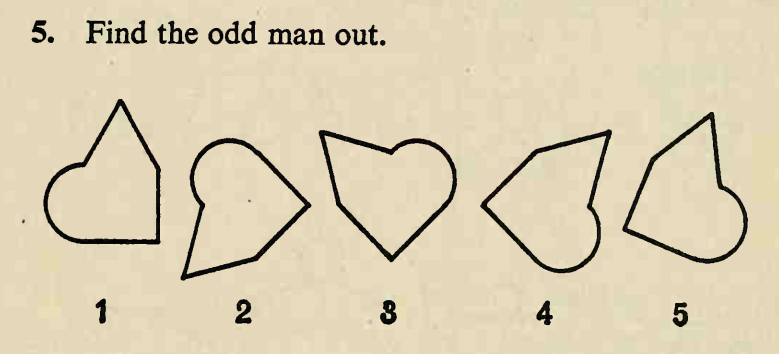

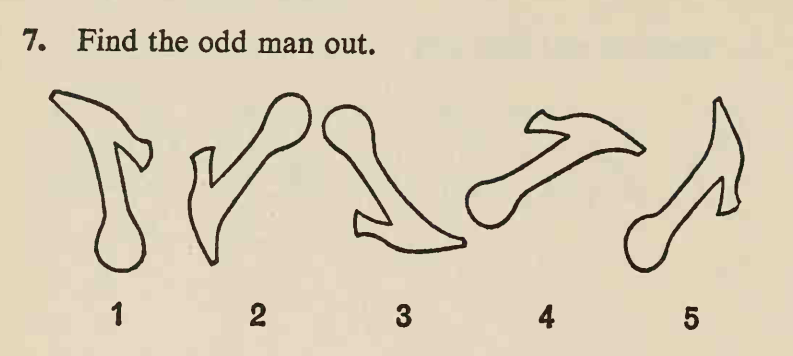

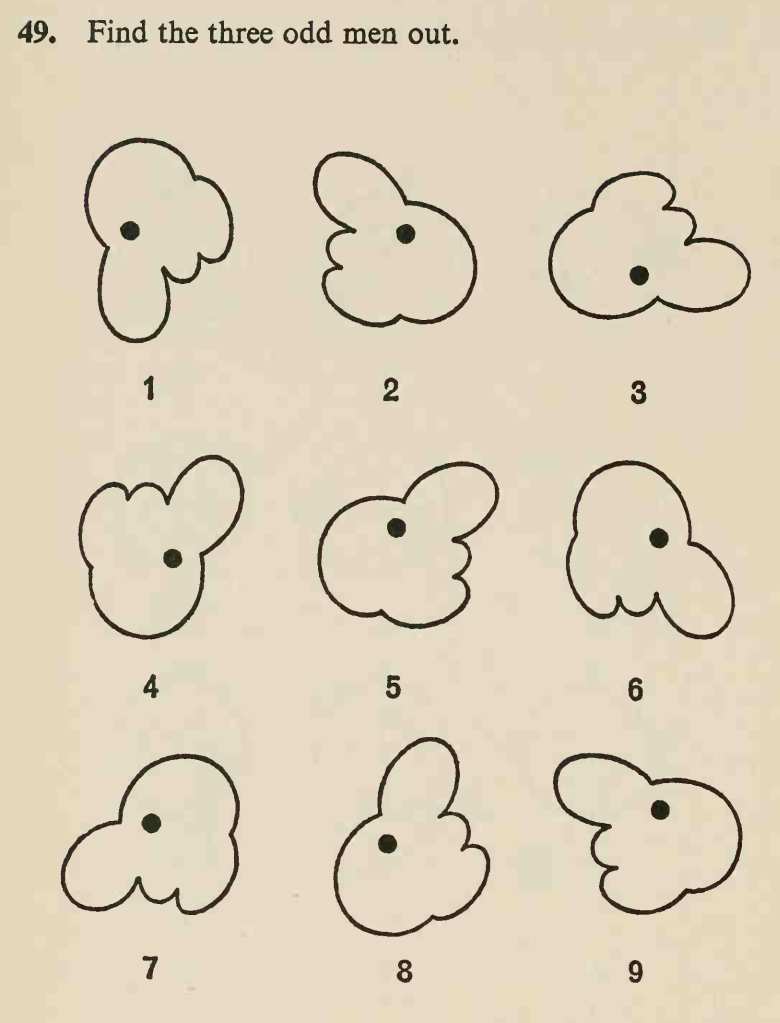

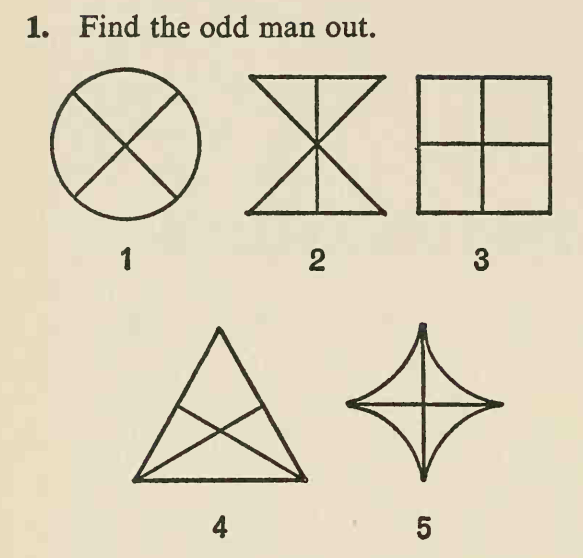

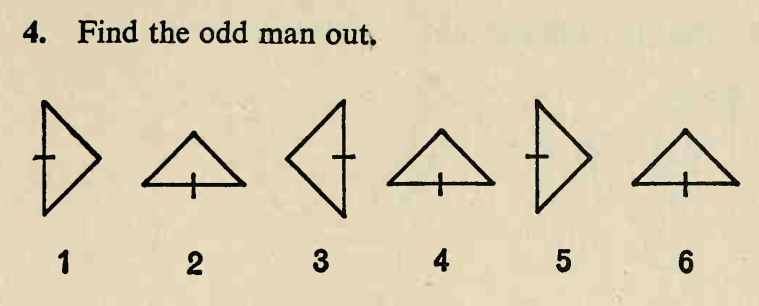

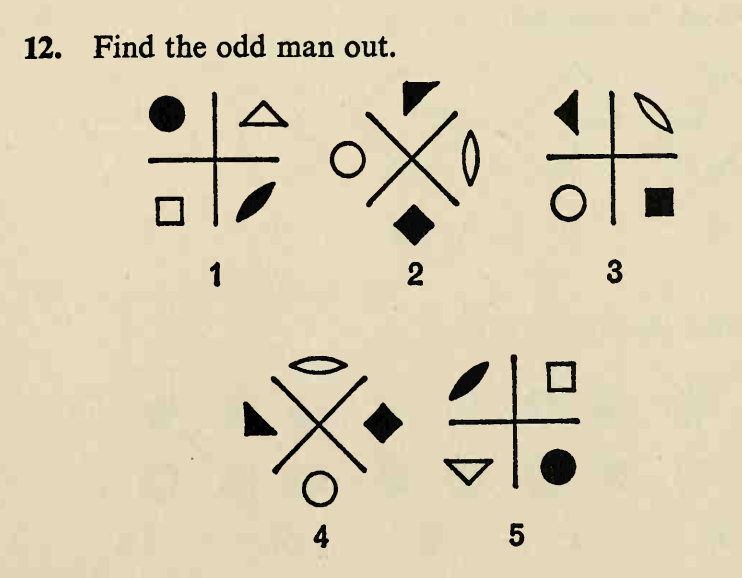

I had taken an online visual IQ test, in it a lot of questions are like the following:

The addresses of the images are:

[f"https://www.idrlabs.com/static/i/eysenck-iq/en/{i}.png" for i in range(1, 51)]

In these images there are several shapes that are almost identical and of nearly the same size. Most of these shapes can be obtained from the others by rotation and translation, but there are some shapes that cannot superpose the others via rotation and translation only, and must involve reflection, these shapes have a different chirality from the others, and they are "the odd men". The task is to find them.

The answers here are 2, 1, and 4, respectively. In these questions, you are tasked to find n shapes that have a different chirality from the others, there are always at least 2n + 1 shapes. Yes, sometimes you are tasked to find multiple shapes that are different:

In the above question, the answers are 5, 6 and 8.

I wish to automate it, and I am extremely close to success, but there is one single problem.

I have written code to load the image from the address, find contours, eliminate the contours that are too small, find the minimum area bounding boxes, and extract the shapes.

I extract the shapes by rotating the image so that the bounding box is straight, and calculating the new coordinates of the bounding box after rotation, and then using index slicing to extract the shape. I also use bit masking with the filled convex hull of the contour to remove extra bits.

I have even written a function to sort the bounding boxes left to right, top to bottom. I do this by repeatedly finding the box that is at the top, and remove it from the list, calculate its radius, then for each remaining box, check if its center is between top-y - radius and top-y + radius, if true, then remove the current box from the list and add it to the current row, then sort the current row by x and concatenate the current row with the result, and then find the top box again... until the list is empty.

I have written code to calculate the Hu Moments, and I have found if the shapes have different chirality then their seventh Hu Moment have opposite signs, and if they have the same chirality then their seventh Hu Moment have the same sign.

So the task is to split the shapes into two groups by their seventh Hu Moment sign and identify whichever group has fewer members. It is extremely easy. Because it is so easy I won't show how I do it here.

But here is the hard part, how to tell whether or not all the shapes are almost identical, that is, they can superpose one another via translation, rotation, and reflection?

All above shapes are identical to others in the same group, problem is, the questions aren't always like this.

In fact the very first question isn't like this:

The most obvious answer is 2, because it has 3 segments that cross its center unlike the others which have 2 segments. And as you can see, in it the shapes are different.

Also the fourth question:

The most obvious answer is 3 because the pattern is right up repeat and 3 breaks the pattern. But as you can see, these are all of the same chirality. This is easy to deal with.

And there are questions that contain shapes that are extremely similar but not the same, such as:

By the way, in it, the program find more than 5 contours because the small geometric figures are counted as distinct shapes, despite the fact that they are obviously intend to group into five groups.

So how do I determine if the shapes are identical? Obviously this is going to involve some heuristics, but using what heuristics?

I have calculated the log10 scaled Hu Moments of each shapes, and the mean, standard deviation, and norm of the moments, I have also written a function to compare every pair of images in a group using cv2.matchShapes, and calculated the mean, std-dev, norm of all the pairs associated with each element:

import cv2

import requests

import numpy as np

def grayscale(image):

return cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

def get_contours(image):

_, thresh = cv2.threshold(grayscale(image), 128, 255, 0)

thresh = ~thresh

contours, _ = cv2.findContours(thresh, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

return contours

def sort_bounding_rectangles(rectangles):

l = len(rectangles)

remaining = list(range(l))

rows = []

while remaining:

top = min(((rectangles[i], i) for i in remaining), key=lambda x: x[0][0][1])

remaining.remove(top[1])

row = [top]

y = top[0][0][1]

width, height = top[0][1]

radius = (width**2 + height**2) ** 0.5 / 2

low, high = y - radius, y + radius

for i in remaining.copy():

rectangle = rectangles[i]

if low <= rectangle[0][1] <= high:

row.append((rectangle, i))

remaining.remove(i)

row.sort(key=lambda x: x[0][0][0])

rows.extend(row)

return rows

def find_largest_shapes(image):

contours = [

e for e in get_contours(image) if cv2.contourArea(cv2.convexHull(e)) >= 1000

]

rectangles = [cv2.minAreaRect(contour) for contour in contours]

return [

(rectangle, contours[i])

for rectangle, i in sort_bounding_rectangles(rectangles)

]

def rotate_image(image, angle):

size_reverse = np.array(image.shape[1::-1])

M = cv2.getRotationMatrix2D(tuple(size_reverse / 2.0), angle, 1.0)

MM = np.absolute(M[:, :2])

size_new = MM @ size_reverse

M[:, -1] += (size_new - size_reverse) / 2.0

return cv2.warpAffine(image, M, tuple(size_new.astype(int)))

def int_sort(arr):

return np.sort(np.intp(np.floor(arr + 0.5)))

RADIANS = {}

def rotate(x, y, angle):

if pair := RADIANS.get(angle):

cosa, sina = pair

else:

a = angle / 180 * np.pi

cosa, sina = np.cos(a), np.sin(a)

RADIANS[angle] = (cosa, sina)

return x * cosa - y * sina, y * cosa + x * sina

def new_border(x, y, angle):

nx, ny = rotate(x, y, angle)

nx = int_sort(nx)

ny = int_sort(ny)

return nx[3] - nx[0], ny[3] - ny[0]

def coords_to_pixels(x, y, w, h):

cx, cy = w / 2, h / 2

nx, ny = x + cx, cy - y

nx, ny = int_sort(nx), int_sort(ny)

a, b = nx[0], ny[0]

return a, b, nx[3] - a, ny[3] - b

def new_contour_bounds(pixels, w, h, angle):

cx, cy = w / 2, h / 2

x = np.array([-cx, cx, cx, -cx])

y = np.array([cy, cy, -cy, -cy])

nw, nh = new_border(x, y, angle)

bx, by = pixels[..., 0] - cx, cy - pixels[..., 1]

nx, ny = rotate(bx, by, angle)

return coords_to_pixels(nx, ny, nw, nh)

def extract_shape_helper(box, contour, image, rectangle):

h, w = image.shape[:2]

angle = rectangle[2]

x, y, dx, dy = new_contour_bounds(box, w, h, angle)

empty = np.zeros_like(image)

mask = cv2.fillConvexPoly(empty, cv2.convexHull(contour), (255, 255, 255))

return rotate_image(image & mask, angle)[y : y + dy, x : x + dx]

def extract_shape(rectangle, contour, image):

box = np.intp(np.floor(cv2.boxPoints(rectangle) + 0.5))

shape = extract_shape_helper(box, contour, image, rectangle)

sh, sw = shape.shape[:2]

if sh < sw:

shape = np.rot90(shape)

return shape

def extract_all_shapes(image):

result = []

for i, (rectangle, contour) in enumerate(find_largest_shapes(image)):

shape = extract_shape(rectangle, contour, img)

result.append((shape, contour, i))

return result

def scaled_HuMoments(image):

moments = cv2.HuMoments(cv2.moments(grayscale(image))).flatten()

return -1 * np.copysign(1.0, moments) * np.log10(np.abs(moments))

def load_online_image(url):

return cv2.imdecode(

np.asarray(

bytearray(

requests.get(url).content,

),

dtype=np.uint8,

),

-1,

)

def pairwise_compare(shapes):

l = len(shapes)

comparison = [[] for _ in range(l)]

shapes = [grayscale(shape) for shape in shapes]

for i, e in enumerate(shapes[: l - 1]):

for j in range(i + 1, l):

diff = cv2.matchShapes(e, shapes[j], cv2.CONTOURS_MATCH_I1, 0)

comparison[i].append(diff)

comparison[j].append(diff)

return comparison

for i in range(1, 51):

img = load_online_image(f"https://www.idrlabs.com/static/i/eysenck-iq/en/{i}.png")

shapes, contours, indices = zip(*extract_all_shapes(img))

moments = [scaled_HuMoments(shape) for shape in shapes]

comparison = pairwise_compare(shapes)

print(

i,

np.mean(moments, axis=0),

np.std(moments, axis=0),

np.linalg.norm(moments, axis=0),

[

np.mean([np.linalg.norm(e - f) for f in moments[:i] + moments[i + 1 :]])

for i, e in enumerate(moments)

],

[(np.mean(e), np.std(e), np.linalg.norm(e)) for e in comparison],

)

The output is quite long, and here is a sample:

1 [ 3.07390334 9.20804671 12.8461357 14.51696789 16.68961852 3.3599212

4.38850922] [ 0.02824479 0.5203131 2.20535896 0.83599035 23.43547701 18.90969881

28.06080182] [ 6.87374697 20.62266349 29.14505196 32.51470718 64.33369835 42.94565052

63.50856683] [62.566545021375056, 48.74582883360671, 64.43159607200943, 78.42156717100089, 51.21300989005003] [(0.005014132959887091, 0.002491910083805807, 0.01119841867500928), (0.006020965019003746, 0.00199687700714013, 0.012686928318818815), (0.0030201480551207693, 0.0015969796757999888, 0.006832755918300609), (0.003546920727516889, 0.0012401031295098184, 0.007514919139713742), (0.0033065038420672516, 0.002016290195678553, 0.007745551965042893)]

2 [ 2.97172593 6.44666183 9.8363414 10.60604303 20.86557466 13.8481828

12.76418785] [1.41401183e-02 7.24228229e-02 3.74833367e-02 8.26491630e-02

1.50811464e-01 1.22104849e-01 1.69709762e+01] [ 6.64505641 14.4160837 21.99488771 23.71655326 46.658062 30.9666818

47.48360373] [10.818253126228177, 10.982586601638047, 42.428013449717554, 10.910867281706224, 10.848427217759596] [(0.001506921852584192, 0.0009882097830638086, 0.0036040932535875334), (0.003516907872134406, 0.0008668050736689571, 0.007244305906522502), (0.0015011522238101704, 0.000993415709169907, 0.003600184867628755), (0.0018174060147817805, 0.001260294847499211, 0.004423260211743394), (0.0023787361481882874, 0.0014468593917222597, 0.005568406508908112)]

3 [ 2.61771073 5.30659133 8.8536436 9.50848944 18.86447369

12.7987164 -11.35360489] [ 0.01619639 0.03695343 0.05006323 0.09325839 0.23476942 0.38993702

15.02706999] [ 5.85349117 11.86618664 19.79766544 21.26265136 42.18551197 28.63207925

42.11396303] [9.747008299781871, 37.573686324953485, 10.243633427009456, 9.720464324546901, 9.78238944786298] [(0.0024618215364579227, 0.0012707943194968936, 0.00554093258570563), (0.002364270277469327, 0.0012510519833639265, 0.005349730838090344), (0.0038182383231789296, 0.002023821027669545, 0.008642868839599421), (0.002909711587421565, 0.0016709432962376343, 0.0067107296238834765), (0.004788150219463536, 0.0015576789867383207, 0.010070302150357719)]

4 [ 2.9954428 6.82028526 9.54511531 10.9612486 -21.23828174

-14.38515271 6.91790527] [4.77222668e-03 7.17286208e-02 3.69215392e-02 4.81258831e-02

8.67832272e-02 7.61551081e-02 2.09493605e+01] [ 7.33731573 16.70714266 23.38083696 26.84972479 52.02338757 35.23677779

54.04071356] [17.94732078416027, 35.29165646520066, 17.950699158333215, 18.23280085935311, 17.93185442022226, 36.313920730849] [(0.0012898553531571987, 0.0002516968837579109, 0.002938603540256601), (0.0004973573147971644, 0.0004829472326333535, 0.0015501650365210475), (0.0004903504494842004, 0.0003222701546145148, 0.0013120625287398094), (0.0004903504494842004, 0.00047557236310889273, 0.0015274368004312548), (0.0005065526488026162, 0.0003082944435671216, 0.0013259733216458537), (0.0006724236253112581, 0.0005150535939673667, 0.0018939822287120812)]

5 [ 3.09082421 7.61628388 9.97917351 11.593797 22.4659269

15.53389331 -13.56588549] [2.12577685e-03 5.70692714e-02 2.52947012e-02 3.34935722e-02

5.10378438e-02 2.73210581e-02 1.81105332e+01] [ 6.91129468 17.03100658 22.31418202 25.92462638 50.23546937 34.73489512

50.59766105] [45.27603301509411, 11.424555924626425, 11.571023004898416, 11.439967497985075, 11.423495225541958] [(0.00033414574755881443, 0.00019891946914752526, 0.000777746323212324), (0.00023785531982488395, 0.00014708424579467746, 0.0005593171856111233), (0.0003804657093928465, 0.00018151804352047388, 0.0008430965689582721), (0.000319213439906485, 0.0001910892479572674, 0.0007440761275617035), (0.00023333035037390037, 0.00013786485948325051, 0.0005420323676163391)]

You can run the code to see the full output.

The problem is, how to interpret these numbers, what heuristics should I use, so that when the computer sees these numbers, it then knows whether all shapes are identical or not? What cutoff should I use?

Upvotes: 0

Views: 326

Answers (1)

Reputation: 49

Using openCV or with handcrafted techniques, you may be able to solve simple problems like figuring chirality of images, and finding degree of intersections. But, solving problems like (https://www.idrlabs.com/static/i/eysenck-iq/en/28.png) will require complex heuristics that involve reasoning like in GPT models.

If you actually want to solve this, you may try GPT-4Vision. Those models will consider the user prompt unlike in this approach. But if you are here for learning, you can try more handcrafted techniques on specific problems, like:

- Using the numbers below each figure to identify the number of figures and their rough locations. You may use those 'Number' pixel locations as seeds to clustering algorithm that clusters the 'Shapes' (you have produced in your code).

- To solve problem of disconnected figures in https://www.idrlabs.com/static/i/eysenck-iq/en/12.png & https://www.idrlabs.com/static/i/eysenck-iq/en/16.png, you may want to try out morphological operations, but I believe it may not work because of the proximity figures to each other. You can perform dilation to connect all the nearby components, and then erode again so all the figures which got connected will be separate again.

Upvotes: -2